Jake Oleson directs ‘Pour Your Heart Out’ for RL Grime, featuring 070 Shake.

Filmed in Brooklyn, NY.

Words from Jake below.

What technologies did you use to create ‘Pour Your Heart Out’, and what were you exploring creatively with them?

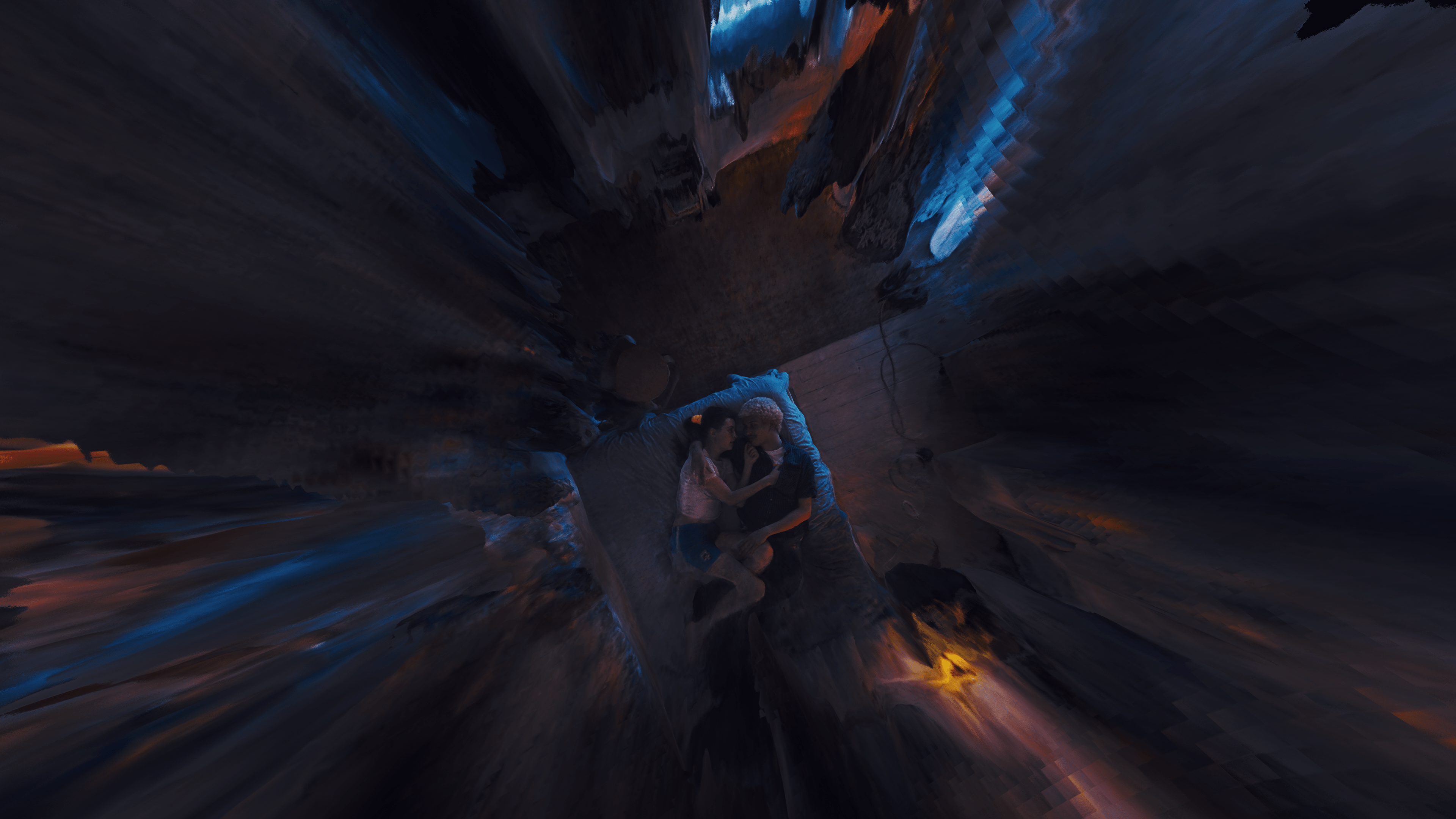

We made “Pour Your Heart Out” using a machine learning technology called Neural Radiance Fields (NeRFs), which has recently been democratised by a consumer app called Luma AI. Like photogrammetry, NeRFs use a set of images to construct photorealistic 3D models. What sets them apart is their dynamic nature: they capture shadows, reflections, and light in a strikingly real way.
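A rough sketch of what that “dynamic nature” means in practice, assuming the volume-rendering formulation from the original NeRF research (Luma AI’s own implementation isn’t public and may differ). Instead of a fixed textured mesh, a NeRF stores a density and a view-dependent colour at points in space, and each pixel is produced by compositing samples along a camera ray. The function name `render_ray` and its inputs are hypothetical, for illustration only, and are not part of any Luma API.

```python
import math
from typing import Sequence, Tuple

Color = Tuple[float, float, float]


def render_ray(densities: Sequence[float],
               colors: Sequence[Color],
               deltas: Sequence[float]) -> Color:
    """Composite samples along one camera ray (hypothetical helper, NeRF-style).

    densities[i]: volume density sigma_i at sample i (>= 0)
    colors[i]:    RGB emitted at sample i as seen from this ray's direction
    deltas[i]:    distance from sample i to the next sample along the ray
    """
    if not (len(densities) == len(colors) == len(deltas)):
        raise ValueError("all per-sample sequences must have the same length")

    transmittance = 1.0  # fraction of light that reaches sample i unabsorbed
    r = g = b = 0.0
    for sigma, (cr, cg, cb), delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution of this sample
        r += weight * cr
        g += weight * cg
        b += weight * cb
        transmittance *= 1.0 - alpha            # light left for later samples
    return (r, g, b)
```

Because `colors` is evaluated for each ray’s viewing direction, the same point in space can look different from different angles, which is one way reflections and highlights can survive in the reconstruction rather than being baked into a single texture.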


I came across NeRFs in the fall of last year and noticed they were mostly being used to create flashy short-form internet content. No one was really applying them as a storytelling tool yet, and the possibilities seemed endless.

My first NeRF project, “Given Again”, was a no-budget experimental film made to begin exploring what’s possible with NeRFs. “Pour Your Heart Out” was a step up, where I could try to push NeRFs even further in a larger production setting. I’m less interested in achieving the cleanest NeRFs and more interested in using them as a creative tool to express a character’s emotional world. “Pour Your Heart Out” became about exploring our desire to alter our internal state: how we take drugs to achieve a feeling, but it never quite breaks beneath the surface. It isn’t until the girl has a real human connection that a fire erupts inside her and she’s able to feel something real again.

Can you talk about scene capture? What differences and comparisons can be drawn with traditional shooting?

The process was quite similar to a traditional live-action shoot, complete with a shot list and rough storyboards for each scene. However, we operated at a much faster pace, similar to a stills shoot.

The scanning process involved the actors and extras staying completely motionless for 2–3 minutes while we captured them from various angles. We had to be mindful of the positions and expressions an actor could realistically hold. Yet not having to repeat actions, nail timing, or worry about composition allowed us to move quickly. Our speed let us steal most locations, shoot twice the number of scenes, and experiment more freely on set. We worked with a skeleton crew on the first day and expanded slightly on the second to properly light the club and apartment scenes.


Because NeRFs don’t perform as well in low light, my cinematographer Jeremy Snell and I invested time in camera tests to find the combination of capture practices and gear that gave the best results. Even so, some NeRFs were far too abstract to fit into the video. Waiting to see the results feels sort of reminiscent of shooting on film. You don’t know what you’re going to get, and while some shots simply don’t turn out as expected, others are beautiful surprises.

I’m curious about the camera moves. Do you have total freedom? How are they constructed, and what logic are you using to build them?

Despite being a consumer app, Luma offers considerable flexibility with only a few restrictions on specific camera movements. Beyond those, you can move the camera at any speed and shoot at any focal length. I programmed all of the camera moves in their field editor in Google Chrome! It’s kind of mind-blowing.


There wasn’t enough time in post to establish as much of a visual language or logic as I would have liked. It was more of a “try to throw a cohesive story together in 3 days that won’t make people nauseous from frenetic camera movements” situation. But because it takes so little time to render shots and test them in the edit, I could feel out the pacing and composition without overthinking it. Shot design became about embracing or juxtaposing the energy of the track while trying to clearly communicate the story.

Editing with NeRFs feels almost like daydreaming – you have an idea for a sequence and you just do it.

Could you walk us through the linear production process for a project like this?

- Ideation

- Camera tests

- Pre-Pro

- Production

- Train NeRFs

- Shoot coverage of NeRFs

- Edit / Shoot more coverage

The video occupies a tangible three-dimensional space. What are your thoughts on the future of VR, and its convergence with other emerging technology?

It might take time for storytellers to work out how to translate a narrative or experience into a more interactive and freeform space. But I think VR offers even greater opportunities than film to leave lasting impressions on an audience. Film is this magical “empathy machine” that already has the power to transport us into different worlds, yet we are always spectators of these stories.


Perhaps VR can tap into the ways we’re innately wired to learn, giving us agency and engaging us in critical thought. By experiencing stories and art as active participants, we could shift the way we perceive ourselves, especially as generative AI tools and AI chatbots make these immersive experiences more accessible to create.

I think the democratisation of creating visual spectacle is both exhilarating and daunting. As the internet is flooded with visually arresting AI-powered imagery, I believe there will be an even greater craving for narratives that deliver meaning. At least I hope so!

What are you reading at the moment?

“Love Saves the Day” by Tim Lawrence.

- Director: Jake Oleson

- Executive Producer: Dan Streit, Tiffany Izzie Chang

- Producer: Adam Braun

- Director of Photography: Jeremy Snell

- Colourist: Jared Rosenthal